timeline

title Some (online Data) Context

2001: Google Earth

: satellite & aerial

2004: OpenStreetMap

: GIS

: crowd sourcing

2005: Google Maps

: GIS

2006: Twitter

: Geolocated multimedia posts

2007: Google Street View

: Road Scenes

2013: Mapillary

: Road scenes

: crowd sourcing

Geotagging of Objects

Neural Network-Based Solutions for Geotagging of Objects

2025-04-08

Introduction


timeline

title My research: imagery & GIS

1998-2001: PhD

: Road scene video analysis

2014-2018: GraiSearch (FP7)

: Social media

2016-2020: Bonseyes (H2020)

: A.I. CNN

2017: Eir

: Telegraph poles in ROI

: road scene imagery (GSV)

2018: OSI

: aerial imagery

: roof tops

2019-present: aimapit.com

: collaborator

Introduction

Motivations:

- Infrastructure maintenance

- Infrastructure compliance (safety)

- Planning (e.g. EV public chargers deployment)

- Autonomous robotics

- Biodiversity monitoring

- etc.

Focus of this talk:

Static object geotagging, e.g. traffic signs, poles, trees (but not moving objects such as cars or pedestrians)

Early works

Detection of Changing Objects in Camera-in-Motion Video

DOI:10.1109/ICIP.2001.959126 (Dahyot, Charbonnier, and Heitz 2001)

Early works

\(\Rightarrow\) low-dimensional feature engineering (straight edges)

\(\Rightarrow\) pixel positions \(\color{green}{(x_i,y_i)}\) used in feature computation (\(\cong\) positional encoding)

\(\Rightarrow\) PDFs modelled with histograms or KDE.

Shape descriptors at pixel \(i\) with position \((x_i,y_i)\) in image \(I\) with derivatives \(I_x\) and \(I_y\): \[ \left\lbrace \begin{array}{l} \|\nabla I_i\|=\sqrt{I^2_x(x_i,y_i)+I^2_y(x_i,y_i)}\\ \theta_i=\arctan\left( \frac{I_y(x_i,y_i)}{I_x(x_i,y_i)}\right)\\ \rho_i= \color{green}{x_i} \cdot \frac{I_x(x_i,y_i)}{\|\nabla I_i\|} + \color{green}{y_i} \cdot \frac{I_y(x_i,y_i)}{\|\nabla I_i\|} \end{array} \right. \] Hough Transform estimate: \[ \hat{p}(\theta,\rho)=\frac{1}{N}\sum_{i=1}^N \frac{1}{h_{\theta_i}} k_{\theta}\left(\frac{\theta-\theta_i}{h_{\theta_i}}\right)\ R_i(\theta,\rho) \]

DOI:10.1109/TPAMI.2008.288 (Dahyot 2009)
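A minimal NumPy sketch of the shape descriptors and a kernel Hough estimate, under stated assumptions: derivatives come from `np.gradient`, \(\theta_i\) is computed with `arctan2` (so that \(\cos\theta_i = I_x/\|\nabla I_i\|\) matches the \(\rho_i\) formula and \(I_x=0\) is handled), the bandwidths are fixed instead of the per-pixel \(h_{\theta_i}\), and a Gaussian kernel in \(\rho\) stands in for \(R_i(\theta,\rho)\). The function names are illustrative, not the published implementation.

```python
import numpy as np

def edge_features(image, min_grad=1e-3):
    """Per-pixel (gradient magnitude, theta, rho) for pixels with a usable gradient."""
    I = np.asarray(image, dtype=float)
    # np.gradient returns d/d(axis 0) = I_y (rows), then d/d(axis 1) = I_x (columns)
    I_y, I_x = np.gradient(I)
    mag = np.hypot(I_x, I_y)
    ys, xs = np.nonzero(mag > min_grad)
    gx, gy, g = I_x[ys, xs], I_y[ys, xs], mag[ys, xs]
    theta = np.arctan2(gy, gx)          # gradient orientation
    rho = xs * gx / g + ys * gy / g     # rho_i = x_i I_x/|grad| + y_i I_y/|grad|
    return g, theta, rho

def hough_kde(theta, rho, thetas, rhos, h_theta=0.05, h_rho=1.0):
    """Kernel estimate of p(theta, rho) on a (len(thetas), len(rhos)) grid.
    Assumption: fixed bandwidths; a Gaussian kernel in rho replaces R_i."""
    # angular difference wrapped to [-pi, pi)
    dtheta = np.mod(thetas[:, None, None] - theta[None, None, :] + np.pi, 2 * np.pi) - np.pi
    drho = rhos[None, :, None] - rho[None, None, :]
    k = np.exp(-0.5 * ((dtheta / h_theta) ** 2 + (drho / h_rho) ** 2))
    k /= 2 * np.pi * h_theta * h_rho    # normalise the 2D Gaussian kernel
    return k.mean(axis=2)               # average over the N edge pixels
```

On a synthetic image with a vertical step edge at column 10, the estimate peaks at \(\theta \approx 0\) and \(\rho \approx 10\), i.e. the line \(x \approx 10\).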

Early works

Robust Object Recognition

DOI:10.1109/CVPR.2000.855886 (Dahyot, Charbonnier, and Heitz 2000)

Early works

Extension to object detection:

- several robust scores (based on M-estimators) computed over a sliding window

- Bayesian probability interpretation of these robust scores

- method robust to partial occlusion and cluttered background

Pattern Analysis & Applications DOI:10.1007/s10044-004-0230-5 (Dahyot, Charbonnier, and Heitz 2004)
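To illustrate why an M-estimator score tolerates partial occlusion, here is a minimal sketch of a sliding-window robust matching score. It is a simplification, not the published method: the residual is taken against a single template (rather than an appearance model), and the Geman-McClure penalty is one assumed choice of bounded M-estimator, so occluded or cluttered pixels contribute at most 1 each instead of dominating the score.

```python
import numpy as np

def geman_mcclure(r, sigma):
    """Bounded robust penalty: large residuals saturate towards 1."""
    r2 = (r / sigma) ** 2
    return r2 / (1.0 + r2)

def robust_scores(image, template, sigma=0.1):
    """Mean robust penalty for every window position (lower = better match)."""
    I = np.asarray(image, dtype=float)
    T = np.asarray(template, dtype=float)
    h, w = T.shape
    H, W = I.shape
    scores = np.empty((H - h + 1, W - w + 1))
    for y in range(H - h + 1):
        for x in range(W - w + 1):
            residual = I[y:y + h, x:x + w] - T
            scores[y, x] = geman_mcclure(residual, sigma).mean()
    return scores
```

With a quarter of the object occluded, the true position still has the lowest score, because the occluded pixels add a bounded penalty while the visible pixels match exactly.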

Early works

Visualisation in Unreal Engine:

- NN-based sentiment analysis of the tweet text (colour of the lights: blue = sad; yellow = happy)

- geolocation (GPS) and orientation of the tweet image, inferred by feature matching against GSV

- 3D city construction using OSM and GSV data.

DOI:10.1016/j.cag.2017.01.005 (Bulbul and Dahyot 2017)

Geotagging of Objects

Remote Sensing DOI:10.3390/rs10050661 (V. Krylov, Kenny, and Dahyot 2018)


Geotagging of Objects

MRF energy to minimize is formulated as: \[ \mathcal{U}(\mathbf{z}=\lbrace z_1,\cdots,z_{N_{\mathcal{Z}}}\rbrace,\lbrace\alpha\rbrace)=\sum_{i=1}^{N_{\mathcal{Z}}} \sum_{j} \alpha_j \ \underbrace{u_j(z_i) }_{\text{energy term } j} \]

- Each object detection in an image corresponds to a ray whose origin is the camera GPS position and whose direction is derived from the pixel location and the camera orientation.

- Any pair of intersecting rays (from a pair of images) defines a site (intersection) \(i\), a potential candidate for the object geolocation. \(N_{\mathcal{Z}}\) is the total number of candidate sites extracted from a collection of images.

- \(z_i\) at site \(i\) is a binary variable:

  - \(z_i=0\): object is absent

  - \(z_i=1\): object is present

Remote Sensing DOI:10.3390/rs10050661 (V. Krylov, Kenny, and Dahyot 2018)
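A minimal sketch of how a candidate site can be obtained from two detection rays, assuming a local planar frame (east, north in meters), bearings measured clockwise from north, and an equirectangular 360° panorama whose centre column looks along the camera heading. The helper names are hypothetical; the 25 m limit mirrors the preprocessing distance used in the pipeline.

```python
import math

def pixel_to_bearing(u, image_width, camera_heading_deg, hfov_deg=360.0):
    """Bearing (degrees clockwise from north) of image column u.
    Assumes the centre column of the panorama looks along camera_heading_deg."""
    offset = (u / image_width - 0.5) * hfov_deg
    return (camera_heading_deg + offset) % 360.0

def intersect_rays(p1, b1_deg, p2, b2_deg, max_range=25.0):
    """Intersect two 2D rays (origins in meters, bearings in degrees).
    Returns the site (east, north), or None if the rays are parallel, meet
    behind a camera, or meet farther than max_range from either origin."""
    d1 = (math.sin(math.radians(b1_deg)), math.cos(math.radians(b1_deg)))
    d2 = (math.sin(math.radians(b2_deg)), math.cos(math.radians(b2_deg)))
    # Solve p1 + t1*d1 = p2 + t2*d2 for (t1, t2) with Cramer's rule
    det = d1[0] * (-d2[1]) - d1[1] * (-d2[0])
    if abs(det) < 1e-9:
        return None
    rx, ry = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (rx * (-d2[1]) - ry * (-d2[0])) / det
    t2 = (d1[0] * ry - d1[1] * rx) / det
    if t1 <= 0 or t2 <= 0 or t1 > max_range or t2 > max_range:
        return None
    return (p1[0] + t1 * d1[0], p1[1] + t1 * d1[1])
```

For example, cameras at (0, 0) and (10, 0) looking at bearings 45° and 315° produce a site at (5, 5).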

Geotagging of Objects

Energy terms \(u_j(\cdot)\):

- A term that enforces consistency with the depth estimation.

- A pairwise term, depending on the current state \(z_i\) and the states \(\lbrace z_k \rbrace\) of its neighbours, that enforces a single detection when multiple sites are clustered together.

- A term that penalizes rays with no positive intersection, i.e. false positives or objects seen from a single camera position only.

MRF optimization:

- Energy minimization is computed with the iterated conditional modes (ICM) algorithm

- Initial state for ICM is set as \(z_i=0,\forall i\) (all sites are empty)

Remote Sensing DOI:10.3390/rs10050661 (V. Krylov, Kenny, and Dahyot 2018)
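A minimal sketch of ICM on binary sites, starting from the all-empty state as above. The slides do not give the exact form of each \(u_j\), so the per-site costs `unary[i, s]` (standing for \(\sum_j \alpha_j u_j(s)\)) and the pairwise penalty `beta` for each pair of neighbouring sites that are both set to 1 are placeholders chosen for illustration.

```python
import numpy as np

def icm(unary, neighbours, beta=1.0, max_iter=20):
    """Iterated conditional modes for a binary MRF.
    unary[i, s]: cost of site i in state s (0 = absent, 1 = present).
    neighbours[i]: indices of the sites clustered with site i.
    Hypothetical pairwise term: beta per neighbouring site already set to 1."""
    n = unary.shape[0]
    z = np.zeros(n, dtype=int)  # initial state: all sites empty
    for _ in range(max_iter):
        changed = False
        for i in range(n):
            active = sum(z[k] for k in neighbours[i])
            cost0 = unary[i, 0]
            cost1 = unary[i, 1] + beta * active
            new_state = int(cost1 < cost0)  # greedy local update
            if new_state != z[i]:
                z[i] = new_state
                changed = True
        if not changed:  # local minimum reached
            break
    return z
```

With two clustered sites that both favour "present", the pairwise penalty keeps only the stronger one, whereas `beta=0` keeps both.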

Geotagging of Objects

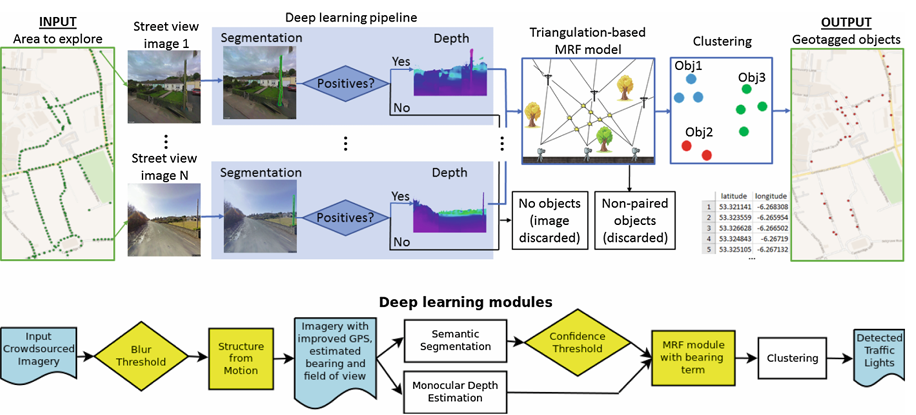

Preprocessing:

- Clustering: ray intersections are only considered when they lie within 25 meters of the origins of the rays (camera GPS positions).

Postprocessing:

- Clustering: locations of positive sites found in the same vicinity (radius = 1 meter) are averaged to obtain the final, unique geotag of each object.

Remote Sensing DOI:10.3390/rs10050661 (V. Krylov, Kenny, and Dahyot 2018)
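A minimal sketch of the postprocessing step, assuming site positions in a local metric frame and interpreting "same vicinity" as single-linkage grouping (any two sites within 1 meter end up in the same group, via union-find). This interpretation and the function name are assumptions.

```python
import math

def merge_nearby(points, radius=1.0):
    """Group positive sites closer than `radius` (single linkage)
    and return one averaged geotag per group."""
    parent = list(range(len(points)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= radius:
                parent[find(i)] = find(j)

    groups = {}
    for i, p in enumerate(points):
        groups.setdefault(find(i), []).append(p)
    # centroid of each group, coordinate by coordinate
    return [tuple(sum(c) / len(g) for c in zip(*g)) for g in groups.values()]
```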

Geotagging of Objects

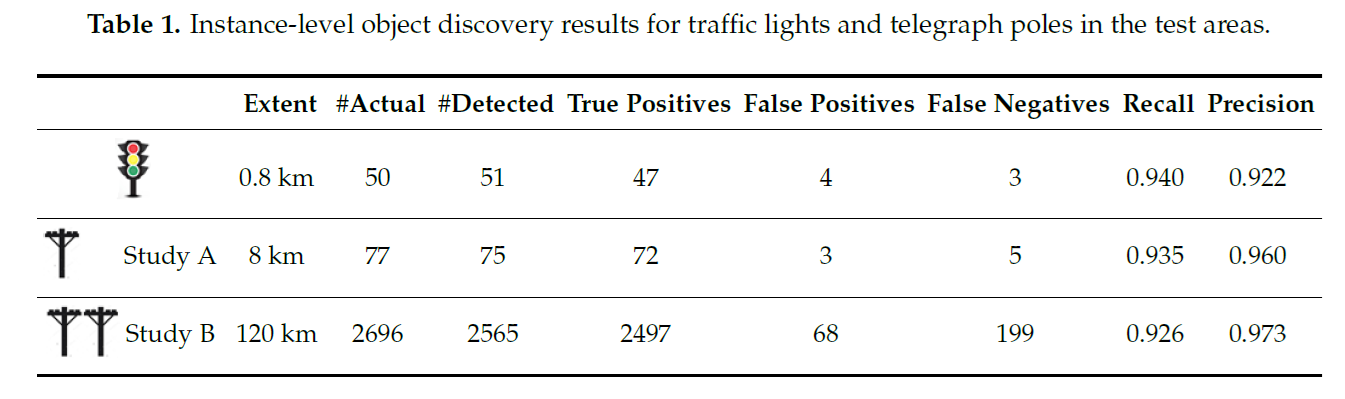

Evaluation:

- Object Detection

Remote Sensing DOI:10.3390/rs10050661 (V. Krylov, Kenny, and Dahyot 2018)

Geotagging of Objects

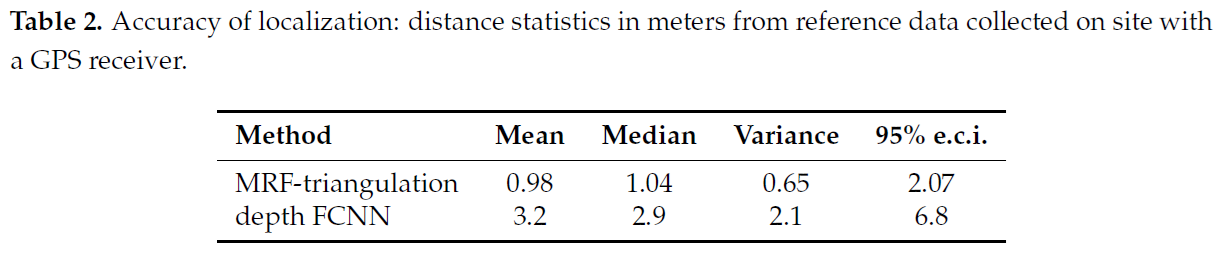

Evaluation:

- Absolute GPS positions against ground truth

Remote Sensing DOI:10.3390/rs10050661 (V. Krylov, Kenny, and Dahyot 2018)


Geotagging of Objects

- Detection in the Lidar point cloud is performed by template matching with a pole-like object template (high false alarm rate).

- The MRF energy is modified by adding a new term that takes into account Lidar candidate locations near each MRF site.

DOI:10.1109/ICIP.2018.8451458 (V. A. Krylov and Dahyot 2018)
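One way such a Lidar term could look, as a hypothetical sketch only (the slide does not specify its form): a cost for \(z_i = 1\) that is 0 when a Lidar pole candidate lies at the site and grows linearly to 1 when the nearest candidate is `radius` meters away or farther. It could then be added, with its own weight \(\alpha\), to the per-site costs of the MRF energy.

```python
import numpy as np

def lidar_term(sites, lidar_candidates, radius=2.0):
    """Hypothetical cost of z_i = 1 from Lidar evidence, in [0, 1].
    sites, lidar_candidates: (x, y) positions in meters."""
    sites = np.asarray(sites, dtype=float)
    cands = np.asarray(lidar_candidates, dtype=float)
    if cands.size == 0:
        return np.ones(len(sites))  # no Lidar support anywhere
    # distance from each site to its nearest Lidar candidate
    d = np.linalg.norm(sites[:, None, :] - cands[None, :, :], axis=2).min(axis=1)
    return np.minimum(d / radius, 1.0)
```

Bounding the cost at 1 keeps the many Lidar false alarms from dominating the other energy terms.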

Geotagging of Objects

Evaluation was also performed with Mapillary crowdsourced images (bottom pipeline) instead of GSV (top pipeline, for reference). Additional preprocessing is needed to infer the missing image metadata in Mapillary.

ECML workshop 2019 (V. A. Krylov and Dahyot 2019)

Geotagging of Objects

- Preprocessing: the quality of the GSV image metadata is enhanced using Structure from Motion (estimation of camera translation T and/or rotation R).

- Postprocessing: the predicted object geolocation is further refined by imposing contextual geographic information extracted from OSM.

| Image metadata correction | Actual | Detected | TP | Precision \(\uparrow\) | Recall \(\uparrow\) | F-measure \(\uparrow\) | Error (m) \(\downarrow\) | Error with OSM (m) \(\downarrow\) |
|---|---|---|---|---|---|---|---|---|
| None | 76 | 94 | 58 | 0.61 | 0.76 | 0.68 | 2.71 | 2.64 |
| T | 76 | 89 | 57 | 0.64 | 0.75 | 0.69 | 2.79 | 2.74 |
| R and T | 76 | 92 | 54 | 0.57 | 0.72 | 0.64 | 2.53 | 2.48 |

IMVIP 2021 (Liu et al. 2021) + patent 2023 (Liu, Ulicny, and Dahyot 2023)
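The precision, recall and F-measure columns can be read with the standard definitions \(P = \text{TP}/\text{Detected}\), \(R = \text{TP}/\text{Actual}\) and \(F = 2PR/(P+R)\). A small helper, assuming those definitions; for the T row, \(57/89 \approx 0.64\), \(57/76 = 0.75\) and \(F \approx 0.69\).

```python
def detection_metrics(actual, detected, true_positives):
    """Precision, recall and F-measure from object counts."""
    precision = true_positives / detected if detected else 0.0
    recall = true_positives / actual if actual else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f_measure = 2 * precision * recall / (precision + recall)
    return precision, recall, f_measure
```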

Geotagging of Objects

Research out of the lab!

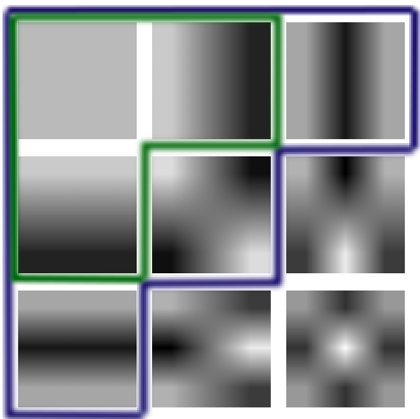

Improving DNNs

In CNNs, the filter weights \(\lbrace \alpha_i\rbrace\) are learnt: \[ \text{filter for convolution}=\sum_i \alpha_i \ \mathbf{e}_i \quad \text{with} \ \lbrace \mathbf{e}_i\rbrace \equiv \text{natural basis} \]

We propose the Harmonic CNN layer, in which the natural basis is replaced by the DCT basis. Harmonic layers:

- replace conventional convolutional layers to produce harmonic versions of existing CNN architectures,

- can be efficiently compressed by truncating high-frequency components,

- have been validated extensively on image classification, object detection and semantic segmentation.

DOI:10.1016/j.patcog.2022.108707 (Ulicny, Krylov, and Dahyot 2022)
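A minimal NumPy sketch of the basis change in the equation above, assuming 3×3 filters and the orthonormal 2D DCT-II basis: a filter is stored as coefficients \(\alpha_i\) over DCT basis filters instead of the natural (one-hot) basis, and compression is illustrated by zeroing coefficients whose frequency index \(u+v\) exceeds a cut-off. The helper names and the \(u+v\) truncation rule are illustrative choices, not the published implementation.

```python
import numpy as np

def dct_basis(n=3):
    """Orthonormal 2D DCT-II basis filters, shape (n*n, n, n), with their (u, v) indices."""
    k = np.arange(n)
    C = np.cos(np.pi * (2 * k[None, :] + 1) * k[:, None] / (2 * n))  # C[u, x]
    C[0] *= np.sqrt(1.0 / n)
    C[1:] *= np.sqrt(2.0 / n)
    basis = np.einsum('ux,vy->uvxy', C, C).reshape(n * n, n, n)
    freqs = [(u, v) for u in range(n) for v in range(n)]
    return basis, freqs

def harmonic_filter(alpha, basis):
    """Filter = sum_i alpha_i * basis_i."""
    return np.tensordot(alpha, basis, axes=1)

def project(filt, basis):
    """DCT coefficients of an arbitrary filter (exact, since the basis is orthonormal)."""
    return np.tensordot(basis, filt, axes=([1, 2], [0, 1]))

def truncate(alpha, freqs, max_freq):
    """Compression: drop coefficients with u + v > max_freq."""
    keep = np.array([u + v <= max_freq for u, v in freqs])
    return np.where(keep, alpha, 0.0)
```

Keeping all nine coefficients reproduces any 3×3 filter exactly; keeping only the DC coefficient reduces it to a constant (averaging) filter.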

Improving DNNs

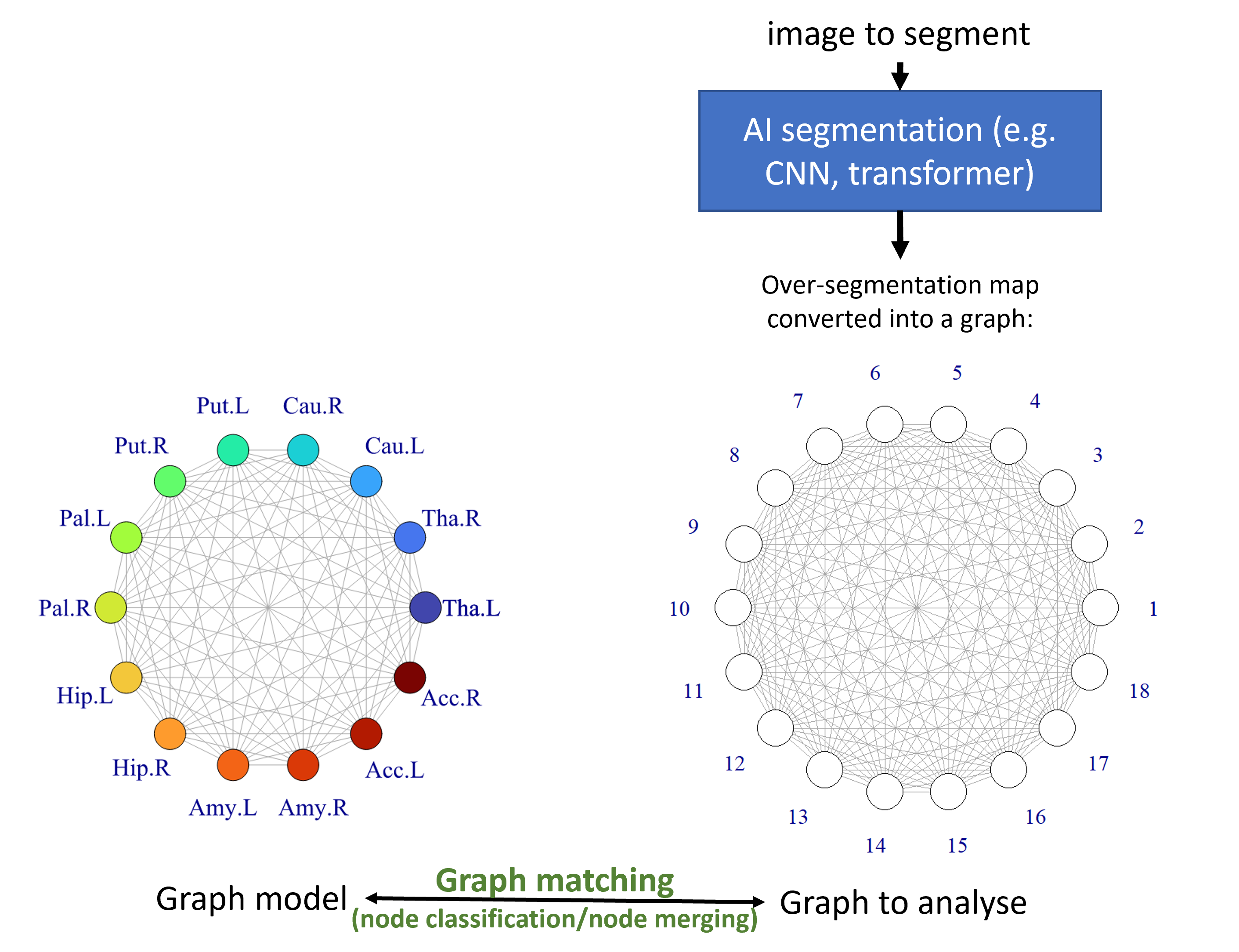

Graph matching as postprocessing of DNN segmentation results: example of 3D brain segmentation (IBSR dataset).

DOI:10.1016/j.cviu.2023.103744 (Chopin et al. 2023)

Summary

We presented a modular pipeline for object geotagging:

- Each module is optimised individually (not end-to-end).

- Image segmentation and depth estimation are performed with DNNs.

- DNN-based modules have been updated over time (e.g. architecture changes).

- Data quality (e.g. image metadata) is very important for geotagging accuracy.

- To create a training dataset for a new object of interest, we have taken advantage of multiple approaches (e.g. vintage computer vision approaches, or AI models such as SAM).

- MRF provides a flexible formalism to take advantage of multiple sources of information.

Thank you! Any Questions?

Many thanks to all my collaborators, past and present!

Check out V. Krylov’s most recent paper on roadside object geolocation (Ahmad and Krylov 2024, EUSIPCO 2024)